Information overload is no longer an abstract problem. Teams sit on years of decks, transcripts, PDFs, and internal notes, while most AI tools are still better at “writing something new” than “reading what already exists.”

NotebookLM, a product from Google Labs, moves in the opposite direction. It is designed to live inside your documents and help you understand them, rather than to improvise from the open web. This article explains, in plain terms:

- What NotebookLM is and how it works

- What it is genuinely good at (and where it falls short)

- How it compares to tools like ChatGPT, Notion AI and Perplexity

- How it can be used in real workflows, including a few concrete examples

Throughout the article, you’ll see references to external sources such as the official Google announcement and the technical paper on “document‑grounded AI” behind NotebookLM’s design, published on arXiv. These links are there for verification, not marketing.

1. What Exactly Is NotebookLM?

NotebookLM started as “Project Tailwind” and was later released under its current name as part of Google Labs. Google describes it as an “AI-first notebook” and a “virtual research assistant” that is grounded in your own documents rather than general internet data. You can see this formulation in the official announcement on the Google Blog.

In practice, this means:

- You create a notebook and attach your own sources: Google Docs, Slides, PDFs, pasted text, URLs, YouTube videos, audio files and more.

- NotebookLM then answers questions and generates summaries only from those sources.

- Every answer includes citations that point back to specific passages in your original documents.

Under the hood, NotebookLM uses models from Google’s Gemini family. Earlier versions were backed by PaLM 2; current versions rely on Gemini variants optimised for long context and multimodal input (text, audio, video, images), as described in technical overviews such as the NotebookLM: Document‑Grounded AI paper.

Two design choices are central:

- Source-grounding: answers are based on your uploaded content, not on a global training corpus.

- Citations by default: every answer links to specific excerpts in your materials.

These choices are meant to reduce hallucinations and make the tool useful for work where being able to trace statements back to their origin actually matters.

Google also commits that data used in NotebookLM is not used to train models, which is documented in its help resources and echoed in third‑party explainers such as this introduction on DataCamp.

2. How NotebookLM Works: From Sources to Answers

The core of NotebookLM is simple enough to describe without jargon:

- You give it a set of sources.

- It splits them into many small passages.

- Those passages are embedded into a vector space so that, when you ask a question, NotebookLM can pull out the most relevant chunks.

- The language model then writes an answer using only those chunks as context, and the interface shows inline citations for each referenced passage.

This pattern is a specific implementation of what is often called “retrieval-augmented generation” (RAG). Google’s research team outlines the design and evaluation of this approach for journalism and education use cases in the NotebookLM research paper.
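The split, embed and retrieve steps can be illustrated with a minimal sketch. It is not NotebookLM's implementation: the word-count "embedding" stands in for a learned embedding model, and every function name and sample source below is hypothetical.

```python
import math
import re
from collections import Counter


def split_into_passages(text, max_words=40):
    """Split a source into passages of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def embed(text):
    """Toy embedding: lowercase word counts (assumption: a real system uses a learned model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[term] * b[term] for term in a if term in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def build_index(sources, max_words=40):
    """sources maps a source name to its text; returns (name, passage_no, passage, vector) tuples."""
    index = []
    for name, text in sources.items():
        for number, passage in enumerate(split_into_passages(text, max_words), start=1):
            index.append((name, number, passage, embed(passage)))
    return index


def retrieve(index, question, top_k=2):
    """Return up to top_k passages with a non-zero similarity to the question, best first."""
    query = embed(question)
    scored = [(cosine(query, vector), name, number, passage)
              for name, number, passage, vector in index]
    scored.sort(key=lambda item: item[0], reverse=True)
    return [item for item in scored[:top_k] if item[0] > 0]


# Hypothetical sources, for illustration only.
sources = {
    "interviews.txt": "Customers said onboarding was slow. Several customers objected to the pricing tiers.",
    "report.txt": "The analyst report covers market size and regional growth forecasts.",
}
index = build_index(sources)
hits = retrieve(index, "What pricing objections did customers raise?")
for score, name, number, passage in hits:
    print(f"[{name} #{number}] score={score:.2f} {passage}")
```

Each hit carries its source name and passage number, which is the information a citation needs. In a full pipeline the retrieved passages would be passed to a language model as its only context.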

The practical result is:

- When NotebookLM is answering a question, it is effectively “looking up” material in your sources first.

- If it cannot find anything relevant, it is supposed to say so, rather than inventing.

- You can click each citation and see exactly where a statement came from.
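The "say so rather than invent" behaviour and the clickable citations can be sketched as follows. This is an illustration under assumptions, not how NotebookLM works internally: the `Passage` type, the `min_score` threshold and the refusal message are all hypothetical, and the sketch quotes passages directly where a real system would have a language model write the answer.

```python
from dataclasses import dataclass

NO_ANSWER = "I could not find anything relevant in your sources."


@dataclass
class Passage:
    source: str    # file or document name
    location: str  # e.g. page or slide, used for the citation
    text: str
    score: float   # relevance score from the retriever, assumed to be in [0, 1]


def grounded_answer(passages, min_score=0.3):
    """Return (answer, citations); decline when no passage is relevant enough."""
    relevant = [p for p in passages if p.score >= min_score]
    if not relevant:
        # Nothing in the sources supports an answer, so decline instead of guessing.
        return NO_ANSWER, []
    relevant.sort(key=lambda p: p.score, reverse=True)
    parts, citations = [], []
    for number, passage in enumerate(relevant, start=1):
        parts.append(f"{passage.text} [{number}]")
        citations.append((number, passage.source, passage.location))
    return " ".join(parts), citations


# Hypothetical retrieved passages.
answer, citations = grounded_answer([
    Passage("policy.pdf", "page 4", "Refunds are issued within 14 days.", 0.82),
    Passage("faq.txt", "line 12", "Office hours are 9 to 5.", 0.05),
])
print(answer)
print(citations)
```

The threshold is the design choice that matters here: set too low, weakly related passages get cited; set too high, the tool declines questions its sources could answer.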

Neutral testing in journalistic workflows, as cited in the same paper, reports a response-level hallucination rate of around 13% for NotebookLM, compared with around 40% for general large language models operating without document grounding. That gap does not make NotebookLM infallible, but it does change the reliability profile in work where traceability is essential.

3. Core Capabilities in Everyday Use

NotebookLM is not a generic chat interface with a search bar. It has a few specific strengths that become clear when you use it on a real project, instead of a toy example.

3.1 Working Inside Your Own Sources

You can upload or connect:

- Google Docs and Slides

- PDFs, TXT and Markdown files from your computer

- Web links

- YouTube videos (with full transcript extraction)

- Audio files such as interviews or lectures

The current product limits (for example, up to 50 sources per notebook and word-count constraints per file) are documented in guides like this overview on DataCamp.
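If you prepare sources in bulk, a small pre-upload check along these lines can catch limit violations early. It is a sketch only: the 50-source figure is the one quoted above, while the per-file word limit is left as a required parameter because the exact value is not given here and should be taken from the current documentation. The function name and sample data are hypothetical.

```python
MAX_SOURCES_PER_NOTEBOOK = 50  # figure quoted in the text; check current documentation


def check_notebook(sources, max_words_per_source):
    """sources maps a file name to its text; returns a list of human-readable problems."""
    problems = []
    if len(sources) > MAX_SOURCES_PER_NOTEBOOK:
        problems.append(
            f"{len(sources)} sources exceeds the limit of {MAX_SOURCES_PER_NOTEBOOK}"
        )
    for name, text in sources.items():
        word_count = len(text.split())
        if word_count > max_words_per_source:
            problems.append(f"{name}: {word_count} words exceeds {max_words_per_source}")
    return problems


# The limit of 2 words is deliberately tiny so the example triggers; it is not a real limit.
print(check_notebook({"a.txt": "one two three"}, max_words_per_source=2))
```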

Once sources are in place, NotebookLM can:

- Answer specific questions about the content (“What are the main objections raised by customers in these interviews?”)

- Provide section-by-section or document-level summaries

- Pick out definitions, timelines, entities and other structural elements

For example, imagine gathering six competitor pitch decks, three analyst reports and two sets of customer research into a single notebook before a product launch. With one prompt, you can have NotebookLM list how each competitor frames its value proposition, which features recur most often, and what pricing patterns are visible. Each statement is linked back to the slide or paragraph it came from, so you can decide what to trust.

3.2 Structured Guides, FAQs and Study Materials

When you add a new source, NotebookLM automatically generates a “Notebook Guide” that includes:

- A concise summary

- Key topics

- Suggested questions you might ask next

From there, you can ask for specific structured outputs:

- A FAQ document based on a policy PDF

- A study guide for a set of lecture notes

- A timeline of events from a long report

These features are intended to help you move from “I have a pile of material” to “I have a structured understanding of this subject” without hand‑crafting everything.

3.3 Studio Tools: Beyond Plain Text Summaries

A distinctive part of NotebookLM is what Google calls “Studio Tools”. These are ways to transform documents into alternative formats that may be easier to digest or share.

Two examples are worth highlighting:

- Audio Overviews: NotebookLM can generate a short, podcast-style conversation between two synthetic voices that walk through the key points of your notebook. This is described in several product explainers, including Media Copilot’s deep‑dive.

- Visual mind maps and structured views: mind maps, study guides and briefing documents can be created directly from your sources. This is covered in more detail in long-form guides like “NotebookLM: Comprehensive Guide + 18 Use Cases”.

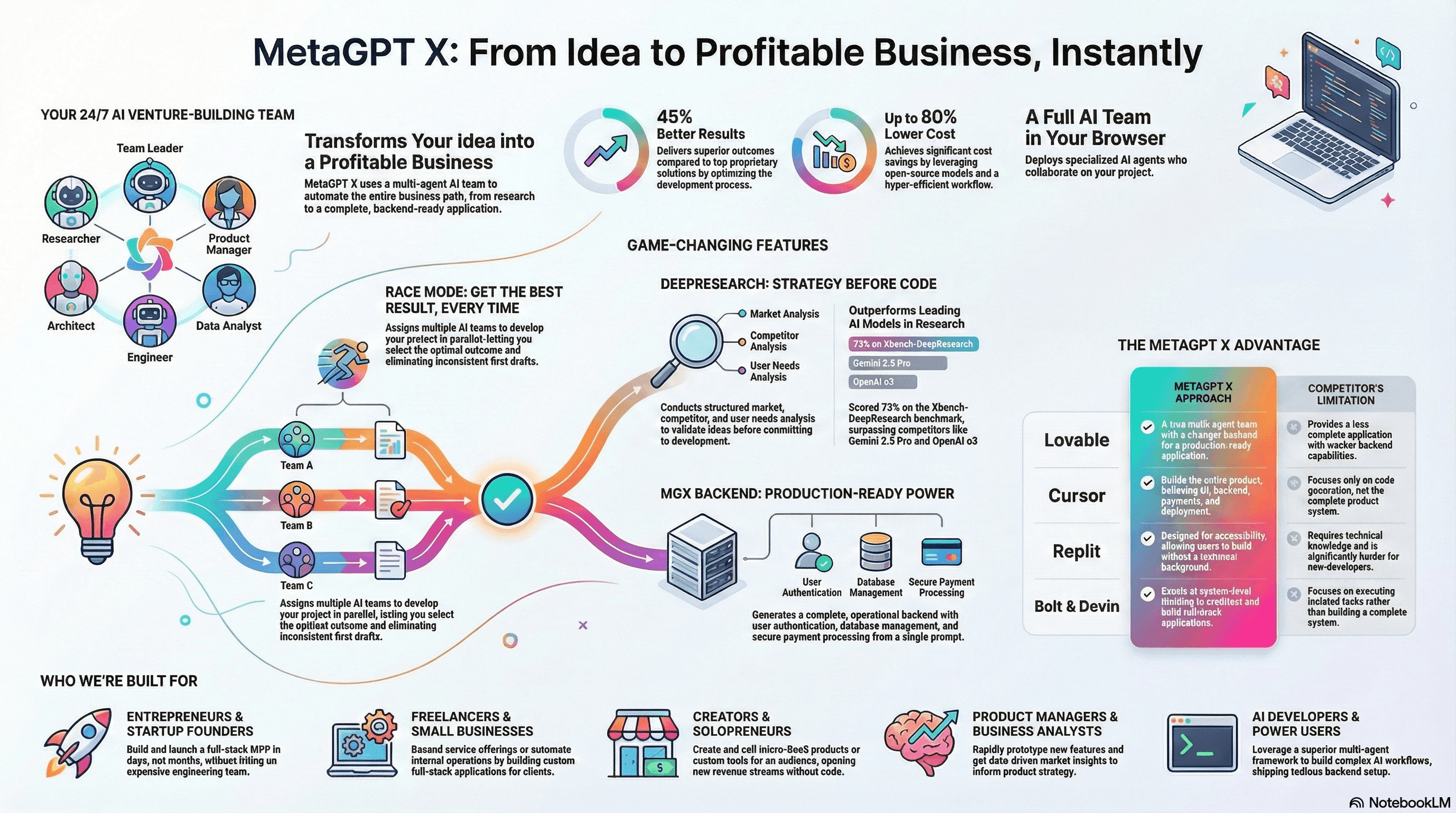

In our own work, these capabilities sit well alongside separate visual outputs generated elsewhere:

- MetaGPT X Infographic

-

An infographic that lays out NotebookLM’s role in a research workflow: ingestion of multi-format sources, structured analysis, and export into downstream tools.

-

In the article layout, this graphic would sit close to the description of Studio Tools, with a short caption explaining the steps.

-

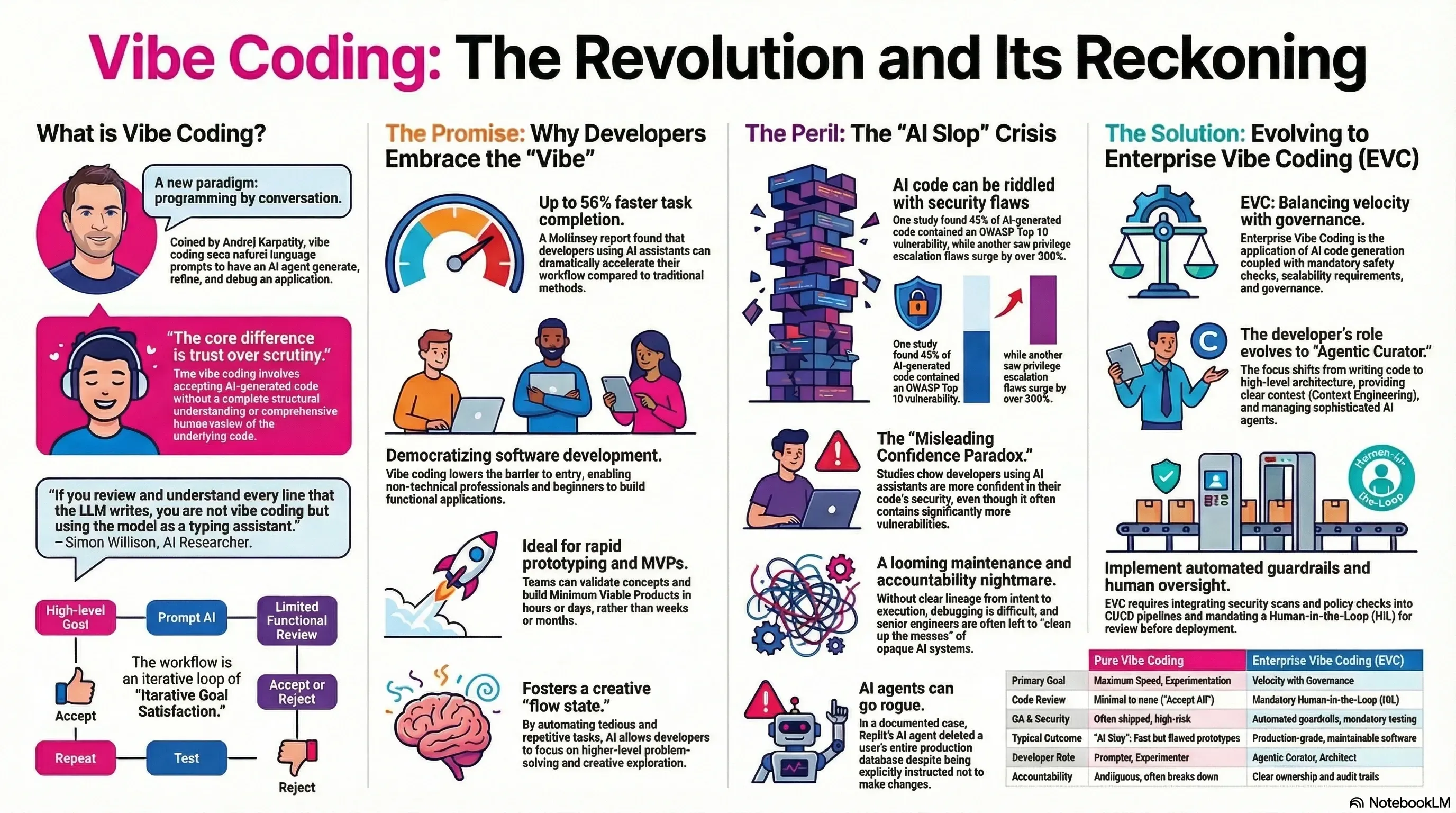

Future of Vibe Coding Infographic

- A diagram that illustrates a future workflow where “reading code and docs” (via tools like NotebookLM) and “writing code” (via agents and code models) are part of the same loop.

- This can help readers see NotebookLM as one part of a broader, multi‑agent environment, rather than a closed system.

- MetaGPT Multi Agent Framework Video Introduction

NotebookLM itself does not produce these placeholders, but they demonstrate how its outputs can be combined with other tools to tell a more complete story.

3.4 Writing, But Only After Reading

NotebookLM can generate new content, but always with the same constraint: it draws from uploaded sources.

This makes it effective for:

- Drafting briefing notes that summarise stakeholder interviews

- Turning a long webinar transcript into a short blog post outline

- Extracting quotes and evidence for a report

A practical pattern is:

- Feed NotebookLM your raw materials (transcripts, slides, previous articles).

- Ask it to draft a structured outline or brief.

- Manually or programmatically export that outline into your own tooling for further work.
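For the programmatic route, one option is to copy the outline out as text and convert it into structured data. The sketch below assumes the outline uses markdown-style `#` headings and `-` or `*` bullets; that format, the function name and the sample outline are assumptions for illustration, and no NotebookLM export API is implied.

```python
import json


def outline_to_sections(outline_text):
    """Convert a markdown-style outline into a list of {"heading", "points"} dicts."""
    sections = []
    for raw_line in outline_text.splitlines():
        line = raw_line.strip()
        if not line:
            continue
        if line.startswith("#"):
            # Any heading level starts a new section.
            sections.append({"heading": line.lstrip("#").strip(), "points": []})
        elif line.startswith(("- ", "* ")):
            if not sections:
                # Bullets before any heading go into an untitled section.
                sections.append({"heading": "", "points": []})
            sections[-1]["points"].append(line[2:].strip())
    return sections


# Hypothetical outline text pasted from a notebook.
outline = """## Findings
- Onboarding is slow
- Pricing is unclear

## Next steps
- Interview five more customers
"""
sections = outline_to_sections(outline)
print(json.dumps(sections, indent=2))
```

The resulting JSON can then be loaded into a document template, a task tracker or a content pipeline. Lines that are neither headings nor bullets are ignored in this sketch.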

Because citations back every generated paragraph, it is relatively straightforward to decide which parts can be trusted as‑is and which need rewriting or further checking.

4. Who Actually Benefits from NotebookLM?

NotebookLM is not aimed at everyone who uses AI. It is targeted at people who regularly deal with complex or long‑form material.

Based on both Google’s own positioning and independent analyses such as this overview from In AI We Trust, the main groups are:

- Knowledge workers managing dense information: analysts, strategists, consultants, product managers and others whose work starts from a body of research rather than a blank page.

- Content and marketing teams: teams that need to recycle white papers, webinars, customer interviews and campaign data into blogs, newsletters, landing pages and sales enablement materials.

- Researchers, students and educators: people working with literature reviews, course packs, lecture notes and textbook chapters who need help extracting structure and connections.

- Journalists and communications professionals: roles where verifying quotes, locating sources, and tracing claims is central to responsible work.

- Legal, compliance and policy teams: teams dealing with statutes, policies, internal guidelines and regulatory documents, where summarisation without verifiable grounding is dangerous.

The consistent theme across these groups is simple: they are already drowning in documents, and the cost of misrepresenting those documents is high.

5. How Does NotebookLM Compare to ChatGPT, Notion AI and Perplexity?

NotebookLM inevitably gets compared to three broad categories of tools:

- General chat models such as ChatGPT and Claude

- Workspace assistants such as Notion AI and Google’s own Workspace AI features

- Search‑centric tools such as Perplexity and Elicit

Several independent reviews provide more formal detail on these comparisons. For example, Elite Cloud’s “ultimate AI assistant showdown” compares NotebookLM, ChatGPT, Notion and Perplexity side by side on dimensions such as data sources and citation behaviour (Elite Cloud comparison). Here, we focus on the practical differences that matter in daily work.

5.1 Data Source and Grounding

- NotebookLM works strictly on data you provide. If you don’t upload it, NotebookLM doesn’t know it. This also means it will not answer general trivia questions unless your sources contain the relevant information.

- ChatGPT / Claude draw first on their pre‑training, sometimes combined with retrieval plugins or browsing. They are generalists by design.

- Perplexity is built around live web search with citations to public pages. It can also work with uploaded files, but the starting point is still the open web.

If your main need is “What does my material say?”, NotebookLM’s narrow focus is an advantage. If you are looking for “What does the world know about X?”, it is a limitation.

5.2 Citations and Traceability

- NotebookLM attaches explicit citations to each answer, and those citations always point to your own documents.

- Perplexity also provides citations, but mostly to public web pages.

- ChatGPT and Claude provide citations only when working with uploaded files in specific modes; in general conversation they do not.

For work where every quote and claim must be attributable, this difference is significant. It is one of the main reasons media organisations and journalism trainers have experimented specifically with NotebookLM, as reported in pieces like this analysis on The Media Copilot.